About Me

Hi, I'm Atiksh and am currently a Senior at Cornell University studying Computer Science. I'm currently applying for CS PhD positions across the country. At Cornell, I'm part of the

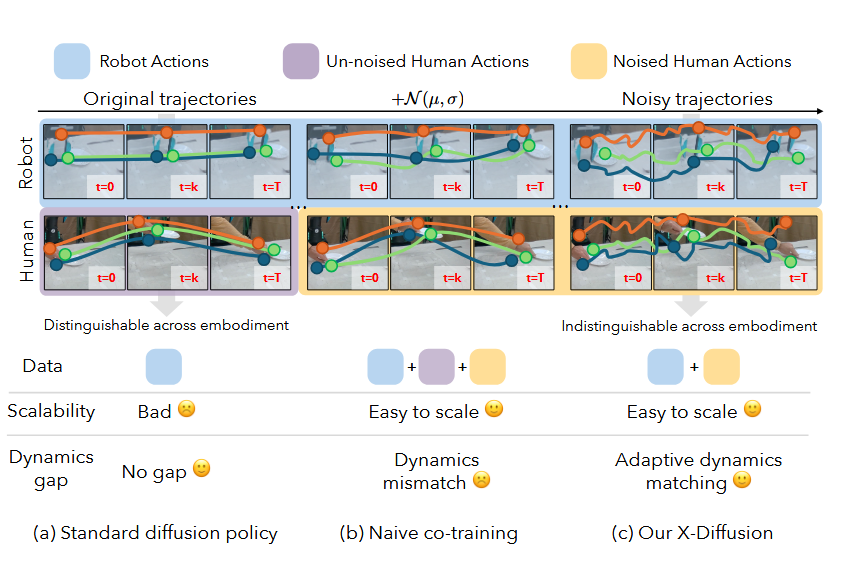

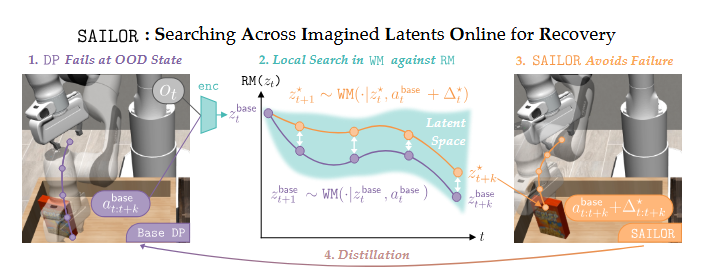

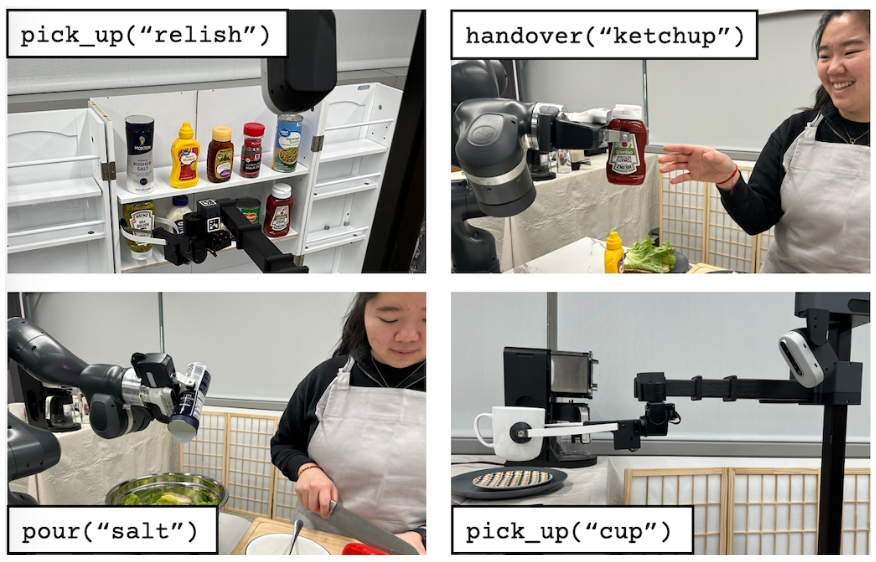

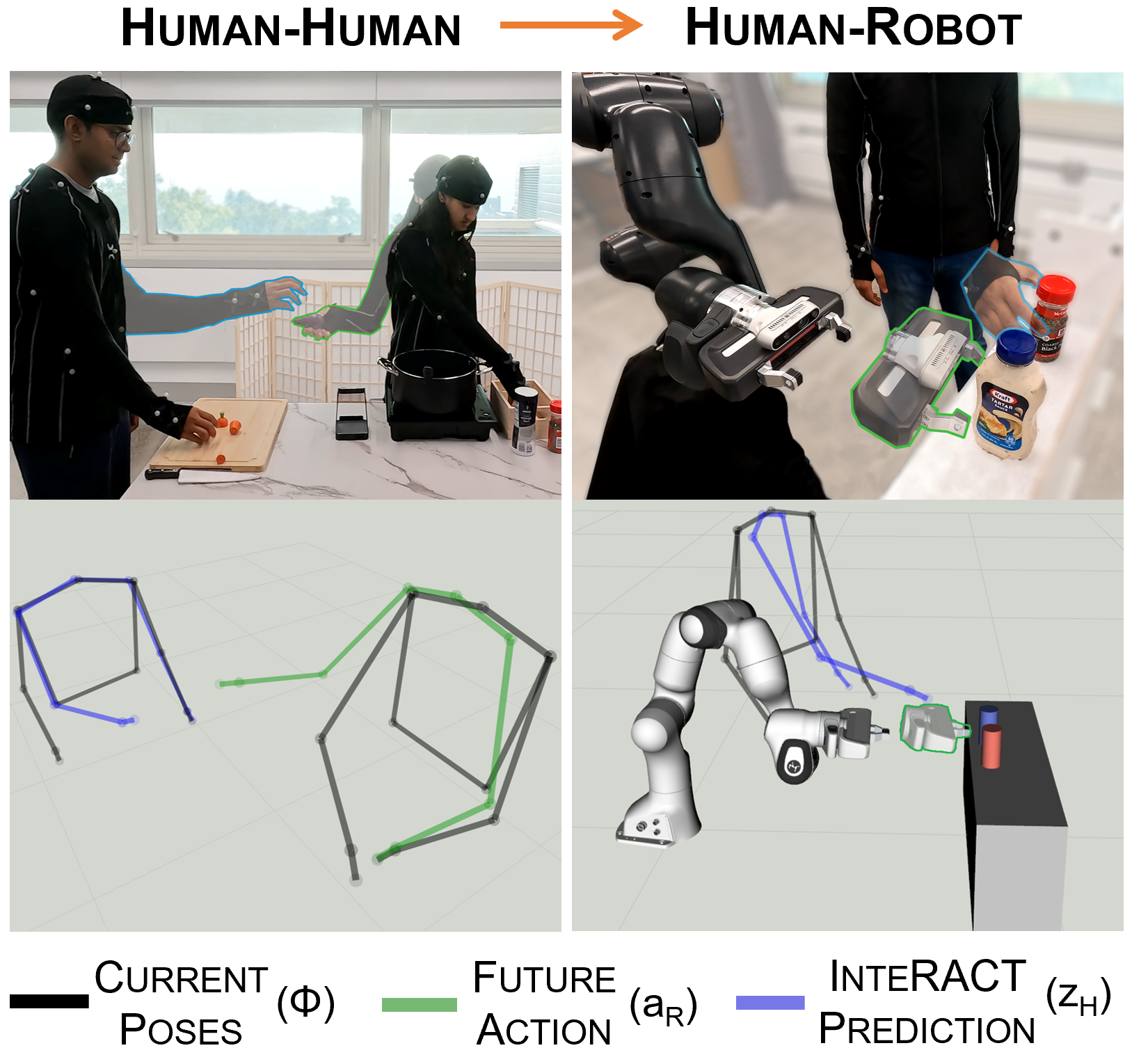

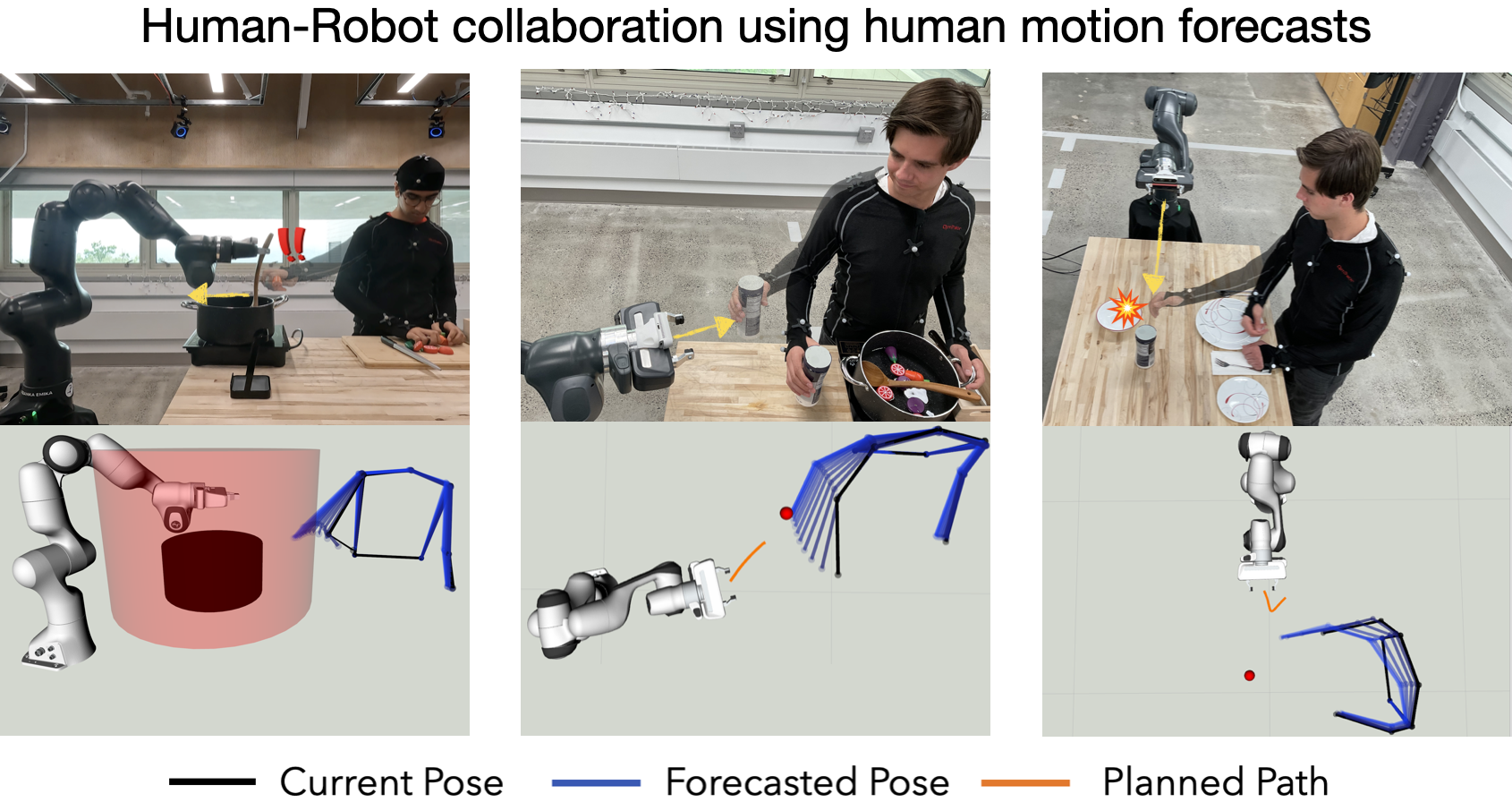

PoRTaL research group advised by Prof. Sanjiban Choudhury.I enjoy finding new methods of teaching robots and working robotics systems and am interested in Imitation Learning, Reinforcement Learning, and Human-Robot Interaction.